The Internet of Things is slowly becoming real: let’s discover a long-range wireless technology that is particularly suited to building simple, cheap wireless networks able to connect billions of intelligent items to one another.

Depending on the estimate, by 2020 the number of Internet-connected devices will be between 20 and 65 billion. For the most part they will be items capable of interacting with their immediate surroundings, exchanging data with one another and with an information infrastructure in order to improve manufacturing processes, energy production and distribution, and logistics. Giving items connectivity and intelligence makes it possible to optimise the available resources, making our lives more pleasant and less tiring. This is the new paradigm of this decade, commonly known as the Internet of Things; its effects will unfold over the next 5-10 years, with implications we have yet to discover. There will surely be some negative aspects, but also great opportunities for the people and companies able to seize them. To give one example, the job of gas meter reader will disappear (a negative consequence in the short term), but the efficiency of the system as a whole will improve, creating new job opportunities.

When dealing with the Internet of Things, the first thought goes to the cellular networks (2G/3G and now LTE/4G) that we are all accustomed to using for telephone calls, for SMS and, increasingly, for Internet services, from e-mail to web browsing to document archiving. Everything happens by means of intelligent terminals: smartphones, tablets and PCs with wireless access. Mobile technology is certainly essential and very useful for many IoT and M2M applications (think of fleet management, for example). There are, however, just as many applications for which it is not suitable, especially when the item to be connected is battery powered and has to save energy, sends little information and must have a very low cost. Think of a smoke detector in a fire alarm system, or a temperature and humidity sensor in a greenhouse: the smoke detector has to send a couple of messages per day to say it is “alive”, plus an alarm signal in case of fire. For such items, which represent a large share of the “Things” to be connected, a purpose-built network is more suitable: it can be scaled and deployed easily, and it is far cheaper than a GSM network. To bring this vision into the real world, however, the radio link needs a range of around 3-30 kilometers: only then can the dedicated network be built in a very short time and with very limited resources.

Fine, but how can such long ranges be achieved with low consumption and with equally limited transmission power, possibly low enough to allow the use of license-free frequencies such as 868 MHz in Europe and 915 MHz in the United States?

In the last 3-4 years, research in this sector has produced astonishing results, enabling a single (and very simple) receiver to manage more than a million transmitters within an operating range that varies from a few kilometers in the most densely urbanized areas up to 15-30 kilometers in rural areas. The pioneers in this field were two companies, Semtech and SigFox, which developed low-cost wireless systems capable of these remarkable results. The French company SigFox (a start-up founded in 2010) has concentrated on building long-range networks covering whole nations, leaving to partner companies the manufacturing of the devices (chips and modules) used to build the terminals. Atmel recently joined the chip manufacturers already active in this sector: at Electronica 2014 it presented the first SoC certified by SigFox, a low-cost and particularly compact device that should give considerable impetus to this technology and to the networks currently being developed.

To increase the range of a radio system, it is possible to act on the transmitter’s power, on the receiver’s sensitivity, or on both. In IoT applications the transmitted power cannot exceed 10-25 mW, both to comply with the rules that govern the use of ISM frequencies and, above all, to limit consumption. To return to the smoke detector example: it is true that the transmitter is active for only a few seconds per day, after which it enters sleep mode and draws no more than a few nanoamperes, but in many cases the battery has to last for 10-20 years. For this reason it is not possible to go beyond these power values. It should also be noted that continuous transmission is not allowed on ISM frequencies: the “active time” is limited, which is one of the reasons why SigFox chose a maximum of 140 messages per day for each item. As for the radio system, then, the only option left is to act on the receiver’s sensitivity, and this can be done in different ways: SigFox chose Ultra Narrow Band technology, Semtech chose Spread Spectrum. Both technologies aim to reduce the effect of noise (locally produced and/or picked up from the surroundings) in order to increase sensitivity. With these technologies and specific software algorithms it has been possible to reach sensitivities between -126 dBm and -138 dBm; this performance is also tied to the very low data rate, between about 100 and 300 bits per second. For most applications this low speed does not affect the performance of the network.
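
To see why narrowing the receiver bandwidth buys sensitivity, it can help to put rough numbers on the thermal noise floor. The sketch below uses the textbook approximation of -174 dBm/Hz at room temperature. The function names, the 6 dB noise figure and the two example bandwidths (100 Hz for an ultra-narrow-band channel, 200 kHz for a GSM-sized channel) are illustrative assumptions, not figures taken from SigFox or Semtech documentation.

```python
import math

THERMAL_NOISE_DBM_PER_HZ = -174.0  # kT at roughly 290 K, expressed in dBm/Hz


def noise_floor_dbm(bandwidth_hz, noise_figure_db=0.0):
    """Noise power seen by a receiver of the given bandwidth, in dBm."""
    return THERMAL_NOISE_DBM_PER_HZ + 10.0 * math.log10(bandwidth_hz) + noise_figure_db


def link_budget_db(tx_power_dbm, sensitivity_dbm):
    """Maximum path loss the link can tolerate, ignoring antenna gains."""
    return tx_power_dbm - sensitivity_dbm


if __name__ == "__main__":
    nf = 6.0  # assumed receiver noise figure, for illustration only
    for label, bw in (("ultra narrow band, 100 Hz", 100.0),
                      ("GSM-sized channel, 200 kHz", 200e3)):
        print(f"{label}: noise floor {noise_floor_dbm(bw, nf):.1f} dBm")

    # Sensitivities quoted in the article, with a 14 dBm (about 25 mW) transmitter
    for sens in (-126.0, -138.0):
        print(f"sensitivity {sens:.0f} dBm -> link budget {link_budget_db(14.0, sens):.0f} dB")
```

Every tenfold reduction in bandwidth lowers the noise floor by 10 dB, which is where a narrow-band receiver gains its sensitivity, at the price of a data rate of only a few hundred bits per second.
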

In this article we will deal specifically with the SigFox technology and network, for two main reasons: the availability of fairly wide coverage at European level, with the first gateways about to be installed in Italy as well; and the availability of low-cost chips (such as the one by Atmel) with which to build the first interesting applications.

“One network, a billion dreams”: this is the slogan of SigFox, the young French company that was the first to believe in a network built specifically for items. It is a simple, cheap, scalable and easily deployed network. The figure shows its configuration: a series of radio gateways interact with the items (a million or more per gateway) located within their radio coverage area. The data received is sent via GSM or the fixed telephone network to a central storage and processing system, and then “distributed” to clients by means of Internet APIs that automate device management and data integration. The infrastructure is so simple and inexpensive that coverage of the whole of France was completed in little more than a year. Through local partners, SigFox has also completed coverage in the Netherlands, Spain, the United Kingdom and part of Russia, and is reaching agreements with various other companies able to build infrastructure across Europe and in some areas of the United States.

Ultimately, a dedicated network for the IoT must offer the following features:

- Very low terminal energy consumption

- Very long-range radio links, so as to reduce the number of gateways

- Low cost, easy integration and scalability

- Security and reliability, to avoid vulnerabilities.

All of these requirements are met by the SigFox devices and infrastructure. To cover France, for example, fewer than one thousand gateways were needed. Having a dedicated infrastructure that can give connectivity to any physical object opens up a multitude of opportunities, from optimizing existing processes to creating completely new businesses. Connected items are certainly not new, but the sector’s growth is accelerating rapidly: it is estimated that the IoT will generate revenues of 1,200 billion by 2020, compared with 200 billion today. Connected items are often simple, isolated and battery-operated, with sensors that detect certain events or pieces of information and send them to a centralized information system ten or a hundred times per day.

The information may concern anything: energy consumption, temperature, humidity, position, presence detection, health data and much more. The challenge for traditional connectivity providers lies in offering adequate solutions for these products, solutions that existing technologies often cannot provide because of either energy consumption or cost. It is now clear that Internet of Things applications have requirements very different from those of cellular phones and smartphones: the latter are moving towards greater bandwidth and greater processing capability, at the expense of battery life and with costs that remain high, and are unacceptable for infrastructures with billions of connected items.

The other possibility, satellite connectivity, has the same problems of high cost and energy consumption, both incompatible with the development of widespread low-cost networks. Even short-range connectivity solutions (Wi-Fi, ZigBee, etc.) have a high energy consumption and are very complex to manage. Wi-Fi, for example, requires each item to be configured, while infrastructures like ZigBee require a large number of concentrators, which makes installation and maintenance more complex and further increases energy consumption. This brings us back to the features of the SigFox network and, more generally, of “low power long range” systems, otherwise known as LPWA (Low Power Wide Area), which turn out to be the most suitable for the rapid development of IoT infrastructures. Technically speaking, even a GSM/GPRS/LTE cellular system could be called long range, but it certainly isn’t low power. Moreover, unlike GSM and satellite systems, LPWA devices operate on free radio frequencies that do not require a public license.

The rapid deployment of solutions for the Internet of Things therefore requires dedicated infrastructures with long range but low consumption, in the order of a few milliwatts when transmitting, so as to ensure many years of operation without any maintenance.

It has been calculated that a SigFox terminal with a latest-generation chip, powered by two 2,700 mAh AA batteries, can operate for about 20 years while sending 140 messages per day.
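
A back-of-the-envelope version of this kind of estimate can be sketched as follows. It is deliberately optimistic and rests on several assumptions that are not in the article: the two AA cells are taken to be in series (so the usable capacity stays at 2,700 mAh), only the 12 payload bytes are counted as airtime (no preamble, headers or repeated transmissions), and the microcontroller's consumption and battery self-discharge are ignored. The currents are the ATA8520 figures quoted later in the article; the function names are illustrative.

```python
SECONDS_PER_DAY = 24 * 3600


def airtime_s(payload_bytes, bit_rate_bps):
    """Time to send the payload bits alone (no preamble, headers or repeats)."""
    return payload_bytes * 8 / bit_rate_bps


def battery_life_years(capacity_mah, msgs_per_day, seconds_per_msg,
                       tx_current_ma, sleep_current_ma):
    """Years of operation from the average daily charge drawn by the radio."""
    tx_seconds = msgs_per_day * seconds_per_msg
    sleep_seconds = SECONDS_PER_DAY - tx_seconds
    mah_per_day = (tx_seconds * tx_current_ma
                   + sleep_seconds * sleep_current_ma) / 3600.0
    return capacity_mah / mah_per_day / 365.0


if __name__ == "__main__":
    for bit_rate in (100, 300):  # range of speeds mentioned in the article
        years = battery_life_years(
            capacity_mah=2700,       # two AA cells in series (assumed)
            msgs_per_day=140,
            seconds_per_msg=airtime_s(12, bit_rate),
            tx_current_ma=32.7,      # ATA8520 transmit current
            sleep_current_ma=5e-6,   # 5 nA OFF-mode current
        )
        print(f"{bit_rate} bit/s: about {years:.1f} years")
```

Under these assumptions the 300 bit/s case comes out in the same range as the 20-year figure, while a slower bit rate, protocol overhead or repeated transmissions would shorten it considerably. The result is dominated by transmit time; the 5 nA sleep current is almost negligible by comparison.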

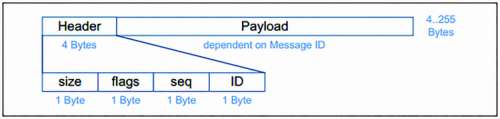

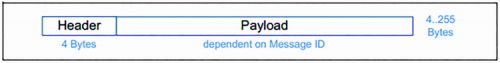

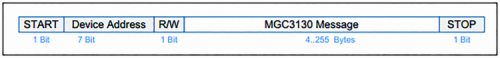

From the technical point of view, the SigFox network allows each connected item to send a maximum of 140 messages per day. Each message carries a 12-byte payload; longer data is split across several messages so that each one respects the 12-byte limit. Each device is identified by a 32-bit ID and by a PAC (Porting Authorization Code) that can be used only once to register the SigFox ID.
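
To give an idea of what fits in 12 bytes, here is a minimal sketch of how an application might pack a sensor reading and split longer data into message-sized chunks. The field layout and all function names are hypothetical, chosen only for illustration; they are not part of the SigFox protocol or of any SigFox library.

```python
import struct

MAX_PAYLOAD_BYTES = 12   # payload size per message, from the article
MAX_MSGS_PER_DAY = 140   # daily message limit per device, from the article


def pack_reading(temp_c, humidity_pct, battery_mv):
    """Pack one sensor reading into 5 bytes (hypothetical layout).

    int16  temperature in hundredths of a degree C
    uint8  relative humidity in percent
    uint16 battery voltage in millivolts
    """
    return struct.pack(">hBH", round(temp_c * 100), round(humidity_pct), battery_mv)


def split_payload(data, size=MAX_PAYLOAD_BYTES):
    """Split a byte string into chunks of at most `size` bytes."""
    return [data[i:i + size] for i in range(0, len(data), size)]


def fits_daily_budget(reports_per_day, bytes_per_report):
    """True if the reports, split into 12-byte messages, stay within 140 per day."""
    msgs_per_report = -(-bytes_per_report // MAX_PAYLOAD_BYTES)  # ceiling division
    return reports_per_day * msgs_per_report <= MAX_MSGS_PER_DAY


if __name__ == "__main__":
    reading = pack_reading(21.47, 48, 2950)
    print(len(reading), reading.hex())
    print([len(chunk) for chunk in split_payload(bytes(30))])
    print(fits_daily_budget(reports_per_day=96, bytes_per_report=5))
```

A greenhouse sensor reporting every 15 minutes (96 reports per day) fits comfortably in one message per report. A real application that splits data across messages would also need some way to reassemble the chunks in order, for example a sequence byte, which this sketch leaves out.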

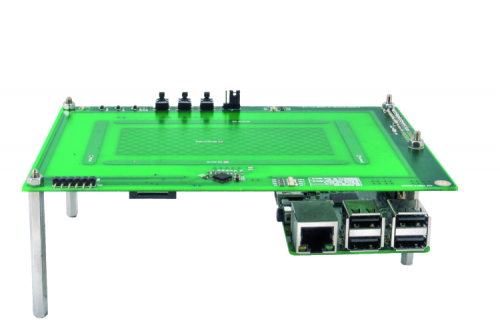

The new Atmel devices, and the ATA8520 chip in particular, integrate a transmitter with low consumption both in transmission (a mere 32.7 mA) and in OFF mode (a mere 5 nA); the chip operates from a supply voltage between 1.9 and 3.2 V and can deliver +14.5 dBm of RF power. The device also integrates the SigFox stack, the ID and the PAC, and it is ready for AES encryption to guarantee the maximum security. Communication takes place through an SPI port, and the chip requires about 10 external components to operate.
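
Since the article quotes the ISM power limit in milliwatts and the chip's output in dBm, a quick conversion helps to compare the two. The helper names below are illustrative; the formulas are the standard definition of dBm (decibels relative to 1 mW).

```python
import math


def dbm_to_mw(power_dbm):
    """Convert a power level in dBm to milliwatts."""
    return 10.0 ** (power_dbm / 10.0)


def mw_to_dbm(power_mw):
    """Convert a power in milliwatts to dBm."""
    return 10.0 * math.log10(power_mw)


if __name__ == "__main__":
    print(f"+14.5 dBm = {dbm_to_mw(14.5):.1f} mW")
    for mw in (10.0, 25.0):
        print(f"{mw:.0f} mW = {mw_to_dbm(mw):.1f} dBm")
```

The chip's maximum output is therefore of the same order as the upper end of the 10-25 mW range mentioned earlier; how much of it is actually radiated depends on the matching network and the antenna, which are outside the scope of this sketch.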

In the classic configuration, this device is controlled by an 8-bit AVR microcontroller (ATmega328P).

These are the components we will use in the long-range IoT projects we are currently planning.

Stay Tuned!